Azure Media Services (AMS) is a great tool for encoding all types of media. One of its major advantages is that it accepts a wide range of input types (see AMS supported file types), so you can hand it a video file almost agnostically and get an mp4 as output (among a myriad of other things it can do). However, with the current version, you can't get any information about a video file until AFTER it has been transcoded.

I wanted to get information about the input video file BEFORE it was transcoded.

Sure, there are packages that can do this (MediaInfo, although it won't give you audio channel info; FFProbe; TaglibSharp; etc.), but most, if not all, require the file to be written to disk. That is a problem if you are working with a byte array from blob storage, or want to get that information from a stream uploaded by a web client without writing it to disk.

So I applied a little hack that uses AMS to get the audio and video metadata from a video file. I needed it quickly, so I didn't want to encode the entire video.

First, you need a JSON preset that performs the simplest (read: fastest) of AMS tasks: generating a single thumbnail.

```json
{
  "Version": 1.0,
  "Codecs": [
    {
      "Type": "PngImage",
      "PngLayers": [
        {
          "Type": "PngLayer",
          "Width": 640,
          "Height": 360
        }
      ],
      "Start": "{Best}"
    }
  ],
  "Outputs": [
    {
      "FileName": "{Basename}_{Index}{Extension}",
      "Format": {
        "Type": "PngFormat"
      }
    }
  ]
}
```

This creates a single thumbnail and asks AMS to generate the “best” one, meaning it uses its brain to figure out the most relevant frame, so you don't get a blank thumbnail just because the first few seconds of the video are black.
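If you'd rather not keep the preset as a hard-coded string, it can also be built in code. The post doesn't show the body of EncodingPreset.GetBaseThumnailOnlyEncodingPreset, so the ThumbnailPreset class below is a hypothetical stand-in: a minimal sketch, assuming .NET 6 or later for System.Text.Json.Nodes, that emits the same preset with the thumbnail size as parameters.

```csharp
using System.Text.Json.Nodes;

// Hypothetical stand-in for EncodingPreset.GetBaseThumnailOnlyEncodingPreset():
// builds the single-thumbnail preset shown above, with a configurable size.
public static class ThumbnailPreset
{
    public static string Build(int width = 640, int height = 360)
    {
        var preset = new JsonObject
        {
            ["Version"] = 1.0,
            ["Codecs"] = new JsonArray
            {
                new JsonObject
                {
                    ["Type"] = "PngImage",
                    ["PngLayers"] = new JsonArray
                    {
                        new JsonObject
                        {
                            ["Type"] = "PngLayer",
                            ["Width"] = width,
                            ["Height"] = height
                        }
                    },
                    // "{Best}" lets the encoder pick a representative frame.
                    ["Start"] = "{Best}"
                }
            },
            ["Outputs"] = new JsonArray
            {
                new JsonObject
                {
                    ["FileName"] = "{Basename}_{Index}{Extension}",
                    ["Format"] = new JsonObject { ["Type"] = "PngFormat" }
                }
            }
        };
        return preset.ToJsonString();
    }
}
```

Keeping the width and height as parameters lets you request a thumbnail size that matches your player without maintaining several near-identical preset files.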

```csharp
// CreateJob, GetLatestMediaProcessorByName and GetJob are my own helpers (not shown here).
IJob metaDataJob = CreateJob(context, $"{inputAsset.Name}-Metadatajob");

IMediaProcessor processor = GetLatestMediaProcessorByName("Media Encoder Standard", inputAsset.GetMediaContext());

string taskName = $"{inputAsset.Name}-MetadataTask";

ITask task = metaDataJob.Tasks.AddNew(taskName,
    processor,
    EncodingPreset.GetBaseThumnailOnlyEncodingPreset(), // the JSON preset from above
    TaskOptions.None);

task.InputAssets.Add(inputAsset);
task.OutputAssets.AddNew($"Thumbnail_{inputAsset.Name}", AssetCreationOptions.None);

await metaDataJob.SubmitAsync();

// Wait for the job to finish without blocking the calling thread.
Task progressJobTask = metaDataJob.GetExecutionProgressTask(CancellationToken.None);
await progressJobTask;

// Get a refreshed job reference after waiting.
metaDataJob = GetJob(metaDataJob.Id, context);

// Check for errors.
if (metaDataJob.State == JobState.Error)
{
    return;
}
```

I have found that, regardless of the video's size or length, this process takes about 40 seconds on average, provided a media reserved unit is available (and one should be if you use the technique from Auto Scaling Media Reserved Units in AMS). And so that not all is lost: if you are doing this sort of thing in the first place, you will likely have a use for the thumbnail too.
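Since the job typically finishes in well under a minute, it can be worth bounding how long you wait for the progress task instead of waiting indefinitely. A minimal sketch using only the .NET base class library; the CompletesWithinAsync name and any timeout you pass are my own choices, not part of the AMS SDK:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class TaskWaitExtensions
{
    // Awaits `task`, but stops waiting after `timeout`.
    // Returns true if the task finished in time, false if the timeout won.
    public static async Task<bool> CompletesWithinAsync(this Task task, TimeSpan timeout)
    {
        using var cts = new CancellationTokenSource();
        Task delay = Task.Delay(timeout, cts.Token);
        Task winner = await Task.WhenAny(task, delay).ConfigureAwait(false);
        if (winner == task)
        {
            cts.Cancel();                     // stop the timer we no longer need
            await task.ConfigureAwait(false); // rethrow if the task itself faulted
            return true;
        }
        return false;
    }
}
```

Usage would be something like `bool finished = await progressJobTask.CompletesWithinAsync(TimeSpan.FromMinutes(5));`. On .NET 6 or later, `Task.WaitAsync(TimeSpan)` does the same job but throws a `TimeoutException` instead of returning false.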

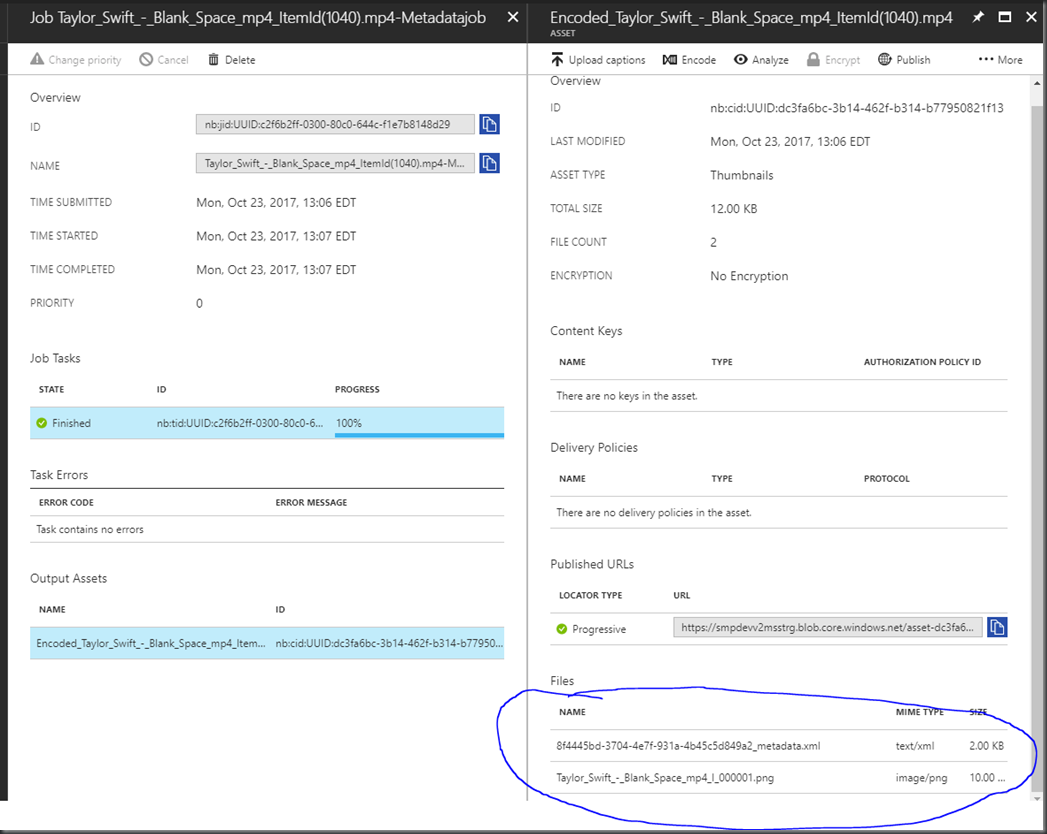

Once the job is complete, you can see that its output is a single thumbnail and a _metadata.xml file.

Now we need to assign a SAS locator to the output asset so we can download that XML file:

```csharp
IAsset outputAsset = metaDataJob.OutputMediaAssets[0];

// GetOrCreateLocator (below) reuses an existing SAS locator that has at least these
// permissions, and extends it if it is expired or about to expire.
// AssetManager.CalculateExpirationDate(message) comes from my surrounding code and supplies the duration.
ILocator outputLocator = outputAsset.GetOrCreateLocator(
    LocatorType.Sas,
    AccessPermissions.Read | AccessPermissions.List | AccessPermissions.Write,
    AssetManager.CalculateExpirationDate(message));

IAssetFile outputAssetXmlFile = outputAsset.AssetFiles
    .Where(file => file.Name.EndsWith("_metadata.xml"))
    .First(); // gets <guid>_metadata.xml

Uri xmlUri = outputAssetXmlFile.GetSasUri(outputLocator);
```
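GetSasUri takes care of building the download URL. For reference, here is a minimal sketch of what that composition looks like, assuming (to my understanding of AMS v2 SAS locators) that the locator's Path is the asset container's URL with the SAS token as its query string: the file name is appended to the path and the token is left untouched. The SasUris class is hypothetical, not part of the AMS SDK.

```csharp
using System;

public static class SasUris
{
    // Turns a container-level SAS URL into a URL for one blob (file) in it.
    // The SAS token lives in the query string, so only the path changes.
    public static Uri ForFile(Uri containerSasUri, string fileName)
    {
        var builder = new UriBuilder(containerSasUri);
        builder.Path = builder.Path.TrimEnd('/') + "/" + fileName;
        return builder.Uri;
    }
}
```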

By the way, GetOrCreateLocator is an extension method that helps reuse locators, since AMS limits you to five unique locators per asset:

```csharp
// DefaultExpirationTimeThreshold is a TimeSpan field declared in the same static class.
public static ILocator GetOrCreateLocator(this IAsset asset, LocatorType locatorType, AccessPermissions permissions, TimeSpan duration, DateTime? startTime = null, TimeSpan? expirationThreshold = null)
{
    MediaContextBase context = asset.GetMediaContext();

    // This asset's locators of the requested type, latest expiry first; take the
    // first one whose permissions include everything we asked for.
    ILocator assetLocator = context.Locators
        .Where(l => l.AssetId == asset.Id && l.Type == locatorType)
        .OrderByDescending(l => l.ExpirationDateTime)
        .ToList()
        .Where(l => (l.AccessPolicy.Permissions & permissions) == permissions)
        .FirstOrDefault();

    if (assetLocator == null)
    {
        // No locator on the asset matches the type and permissions, so create a new one.
        assetLocator = context.Locators.Create(locatorType, asset, permissions, duration, startTime);
    }
    else if (assetLocator.ExpirationDateTime <= DateTime.UtcNow.Add(expirationThreshold ?? DefaultExpirationTimeThreshold))
    {
        // A matching locator exists but is expired (or near expiration), so extend it.
        assetLocator.Update(startTime, DateTime.UtcNow.Add(duration));
    }

    return assetLocator;
}
```
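The branching in GetOrCreateLocator (create, reuse, or extend) is the part most worth unit-testing, and it doesn't need a live AMS account. A minimal sketch of the same decision with the SDK types swapped out: Access, LocatorInfo and LocatorAction are hypothetical stand-ins for AccessPermissions and ILocator, and their values are my own, not the SDK's.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

[Flags]
public enum Access { None = 0, Read = 1, Write = 2, List = 4 }

public sealed record LocatorInfo(Access Granted, DateTime ExpiresUtc);

public enum LocatorAction { Create, Reuse, Extend }

public static class LocatorPolicy
{
    public static LocatorAction Decide(
        IEnumerable<LocatorInfo> existing, Access required, DateTime nowUtc, TimeSpan threshold)
    {
        // Same selection as GetOrCreateLocator: latest expiry first, then the first
        // locator whose permissions are a superset of the required ones.
        LocatorInfo match = existing
            .OrderByDescending(l => l.ExpiresUtc)
            .FirstOrDefault(l => (l.Granted & required) == required);

        if (match == null) return LocatorAction.Create;                          // nothing suitable
        if (match.ExpiresUtc <= nowUtc + threshold) return LocatorAction.Extend; // about to expire
        return LocatorAction.Reuse;
    }
}
```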

OK, so now we have an XML file containing the metadata, and it looks like this:

```xml
<?xml version="1.0"?>
<AssetFiles xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns="http://schemas.microsoft.com/windowsazure/mediaservices/2014/07/mediaencoder/inputmetadata">
  <AssetFile Name="Taylor_Swift_-_Blank_Space_mp4_ItemId(1040).mp4" Size="62935612" Duration="PT4M32.463S" NumberOfStreams="2" FormatNames="mov,mp4,m4a,3gp,3g2,mj2" FormatVerboseName="QuickTime / MOV" StartTime="PT0S" OverallBitRate="1847">
    <VideoTracks>
      <VideoTrack Id="1" Codec="h264" CodecLongName="H.264 / AVC / MPEG-4 AVC / MPEG-4 part 10" TimeBase="1/90000" NumberOfFrames="6531" StartTime="PT0S" Duration="PT4M32.355S" FourCC="avc1" Profile="High" Level="4.0" PixelFormat="yuv420p" Width="1920" Height="1080" DisplayAspectRatioNumerator="16" DisplayAspectRatioDenominator="9" SampleAspectRatioNumerator="1" SampleAspectRatioDenominator="1" FrameRate="23.980" Bitrate="1716" HasBFrames="1">
        <Disposition Default="1" Dub="0" Original="0" Comment="0" Lyrics="0" Karaoke="0" Forced="0" HearingImpaired="0" VisualImpaired="0" CleanEffects="0" AttachedPic="0"/>
        <Metadata key="language" value="und"/>
        <Metadata key="handler_name" value="VideoHandler"/>
      </VideoTrack>
    </VideoTracks>
    <AudioTracks>
      <AudioTrack Id="2" Codec="aac" CodecLongName="AAC (Advanced Audio Coding)" TimeBase="1/44100" NumberOfFrames="11734" StartTime="PT0S" Duration="PT4M32.463S" SampleFormat="fltp" ChannelLayout="stereo" Channels="2" SamplingRate="44100" Bitrate="125" BitsPerSample="0">
        <Disposition Default="1" Dub="0" Original="0" Comment="0" Lyrics="0" Karaoke="0" Forced="0" HearingImpaired="0" VisualImpaired="0" CleanEffects="0" AttachedPic="0"/>
        <Metadata key="language" value="und"/>
        <Metadata key="handler_name" value="SoundHandler"/>
      </AudioTrack>
    </AudioTracks>
    <Metadata key="major_brand" value="isom"/>
    <Metadata key="minor_version" value="512"/>
    <Metadata key="compatible_brands" value="isomiso2avc1mp41"/>
    <Metadata key="encoder" value="Lavf56.40.101"/>
  </AssetFile>
</AssetFiles>
```

That has the information I am looking for! We can consume it now:

```csharp
// Requires: using System; using System.Collections.Generic; using System.Linq; using System.Xml.Linq;
XDocument doc = XDocument.Load(xmlUri.ToString());

// Attributes of the first VideoTrack element, keyed by attribute name.
Dictionary<XName, string> videoAttributes = doc.Descendants()
    .First(element => element.Name.LocalName == "VideoTrack")
    .Attributes()
    .ToDictionary(attribute => attribute.Name, attribute => attribute.Value);

int width = Convert.ToInt32(videoAttributes["Width"]);
int height = Convert.ToInt32(videoAttributes["Height"]);
int bitrate = Convert.ToInt32(videoAttributes["Bitrate"]); // kbps

Dictionary<XName, string> audioAttributes = doc.Descendants()
    .First(element => element.Name.LocalName == "AudioTrack")
    .Attributes()
    .ToDictionary(attribute => attribute.Name, attribute => attribute.Value);

int channels = Convert.ToInt32(audioAttributes["Channels"]);
```

Or pull anything else you want out of that file.
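The parsing above throws if a file has no VideoTrack (an audio-only upload, say) or an attribute is missing. A slightly more defensive sketch, using only System.Xml.Linq: it matches elements by the namespace declared on AssetFiles, parses numbers with the invariant culture, converts the ISO 8601 durations (such as PT4M32.463S) with XmlConvert.ToTimeSpan, and returns null for anything absent. MediaSummary and InputMetadata are my own names. As a sanity check on units, the sample file's Size of 62,935,612 bytes over 272.463 seconds works out to about 1,848 kbps, which matches OverallBitRate="1847", so the bitrate attributes appear to be in kilobits per second.

```csharp
using System;
using System.Globalization;
using System.Linq;
using System.Xml;
using System.Xml.Linq;

// Null fields mean "not present in the metadata" (e.g. no video track).
public sealed record MediaSummary(
    TimeSpan? Duration, int? Width, int? Height, int? VideoKbps, double? FrameRate, int? AudioChannels);

public static class InputMetadata
{
    // Default namespace declared on <AssetFiles> in the metadata file.
    static readonly XNamespace Ns =
        "http://schemas.microsoft.com/windowsazure/mediaservices/2014/07/mediaencoder/inputmetadata";

    static int? ReadInt(XElement e, string name) =>
        int.TryParse((string)e?.Attribute(name), NumberStyles.Integer, CultureInfo.InvariantCulture, out int v)
            ? v : (int?)null;

    static double? ReadDouble(XElement e, string name) =>
        double.TryParse((string)e?.Attribute(name), NumberStyles.Float, CultureInfo.InvariantCulture, out double v)
            ? v : (double?)null;

    // xs:duration values such as "PT4M32.463S"; null if missing or malformed.
    static TimeSpan? ReadDuration(XElement e, string name)
    {
        string raw = (string)e?.Attribute(name);
        if (raw == null) return null;
        try { return XmlConvert.ToTimeSpan(raw); }
        catch (FormatException) { return null; }
    }

    public static MediaSummary Parse(XDocument doc)
    {
        XElement file = doc.Descendants(Ns + "AssetFile").FirstOrDefault();
        XElement video = doc.Descendants(Ns + "VideoTrack").FirstOrDefault();
        XElement audio = doc.Descendants(Ns + "AudioTrack").FirstOrDefault();
        return new MediaSummary(
            ReadDuration(file, "Duration"),
            ReadInt(video, "Width"),
            ReadInt(video, "Height"),
            ReadInt(video, "Bitrate"),
            ReadDouble(video, "FrameRate"),
            ReadInt(audio, "Channels"));
    }
}
```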

For the custom bitrate ladder in my post on that topic (see Creating a custom bitrate ladder from AMS), we need this information BEFORE we transcode so we can set the ceiling of the ladder.
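As an illustration of what "setting the ceiling" means, here is a minimal sketch that drops every rung above the source's height or video bitrate, since encoding above what the source contains adds bytes rather than quality. The Rung record, the LadderCeiling class and the fallback to the lowest rung are my own assumptions for this sketch, not the method from the ladder post.

```csharp
using System.Collections.Generic;
using System.Linq;

// One output of a bitrate ladder: frame height in pixels, video bitrate in kbps.
public sealed record Rung(int Height, int VideoKbps);

public static class LadderCeiling
{
    // Keeps rungs at or below the source's height and video bitrate, highest first.
    // If nothing survives, keeps the lowest rung so the job still has an output
    // (an assumption of this sketch).
    public static List<Rung> Cap(IEnumerable<Rung> ladder, int sourceHeight, int sourceKbps)
    {
        List<Rung> ordered = ladder.OrderByDescending(r => r.VideoKbps).ToList();
        List<Rung> kept = ordered
            .Where(r => r.Height <= sourceHeight && r.VideoKbps <= sourceKbps)
            .ToList();
        if (kept.Count == 0 && ordered.Count > 0)
        {
            kept.Add(ordered[ordered.Count - 1]);
        }
        return kept;
    }
}
```

With the sample file (Height 1080, video Bitrate 1716) and a hypothetical ladder of 1080p/6000, 720p/3000, 480p/1500 and 360p/1000 kbps, only the 480p and 360p rungs survive: the 1080p source is tall enough for every rung, but its bitrate caps the ladder.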

I spoke with David Bristol from Microsoft about this (his blog, David Bristol’s Media blog, has a lot of great AMS-related information). He agrees that something like the output of MediaInfo or FFmpeg's ffprobe.exe would be great to run on an uploaded asset, and he is suggesting it to the AMS team, so hopefully we will see this kind of functionality in the future. 40 seconds isn't great, and I hope we can improve on that, but this might get you to the dance for now.
