It was time. My last super rig, built in 2009, needed an upgrade. That thing has literally been powered on for 9 years, and, as a matter of fact, it is still on (just not as my primary dev machine anymore)… But it could no longer handle the rigors of my natively compiled UWP development and media work (well, at least not to the level of my patience). Since I had such good luck with it over the years, I decided to build my next rig this year, and it's a doozy. I went all out on this one. This post highlights the specs.

Intel – Core i9-7980XE 2.6GHz 18-Core Processor (https://siliconlottery.com/collections/all/products/7980xe43g)

I got it from Silicon Lottery (https://siliconlottery.com/). I was planning on overclocking it (2.6GHz isn’t all that great for single-threaded performance), and for a few hundred extra bucks they de-lid the chip and guarantee it up to a certain clock speed if you follow their specs. I got the one that is good up to 4.3GHz. I am thoroughly convinced that my last rig lasted so long because I took great care to keep everything cool, so de-lidding the processor interested me, but it wasn’t something I wanted to try myself on a two-thousand-dollar chip. I left it up to them, and I think it was worth it. A lot of my choices after this chip come from Silicon Lottery’s QVL (qualified vendor list).

Asus – ROG RAMPAGE VI EXTREME EATX LGA2066 Motherboard (https://www.asus.com/us/Motherboards/ROG-RAMPAGE-VI-EXTREME/)

This MOBO is beast mode. You don’t have a lot of choices once you get into the X299 chipset in the first place, and the Silicon Lottery QVL narrows the list further to this board plus 3 others. In my opinion this is the most feature-rich of the four, but it’s pricey…

G.Skill – Trident Z RGB 128GB (8 x 16GB) DDR4-2400 Memory (https://www.newegg.com/Product/Product.aspx?Item=N82E16820232553)

So why did I name it ARIES? Well, for one, it is my birth sign. But more importantly, the mascot for Aries is…..RAM!!! That’s not a typo: 128GB of RAM. I’m not actually one for all the LED lighting and stuff, but since I got a clear case (more on that below), I figured I would get a blue motif going and the memory could match, hence the RGB version. If you don’t want the RGB, the Ripjaws are identical but with a plain heat sink. I’m not sure I would ever buy any other brand of memory (fingers crossed). I have had G.Skill in all of my builds and it has been literally flawless.

AMD – Vega Frontier Edition Liquid 16GB Video Card (https://pro.radeon.com/en/product/radeon-vega-frontier-edition/)

This build isn’t a gaming PC, so I didn’t get a gaming video card. It is for work. However, this card is unique in that it can run professional (non-consumer) workstation drivers for development and switch on the fly to gaming drivers to test what you developed, so it’s kind of a hybrid. It also has 16GB of HBM2 VRAM rather than GDDR, which is important for driving my 6 monitors. Everyone has an opinion on video cards, and I’m not trying to start a debate; this one makes me pretty happy currently. It also has some pretty sweet-looking blue LEDs on a gold frame. It’s liquid cooled, with a 120mm radiator. It supports up to 4 monitors natively, so I’m using a Club 3D MST hub to get to 6.

(6 Acer 1920×1080, bottom row are touch screens)

Samsung – 960 EVO 1TB M.2-2280 Solid State Drive x 3 (https://www.samsung.com/us/computing/memory-storage/solid-state-drives/ssd-960-evo-m-2-1tb-mz-v6e1t0bw/)

A friend once told me that the feeling I got moving from an HDD to an SSD is tenfold going from a SATA SSD to an NVMe M.2 drive. I’m not sure it’s quite that much, but these are fast, and they aren’t even the PROs. My MOBO holds 3 of them, so I got 3TB worth. All of the hard drives in my last PC were Samsung, and not one of them has failed in 9 years, so I stuck with them.

EVGA – SuperNOVA T2 1600W 80+ Titanium Certified Fully-Modular ATX Power Supply (https://www.evga.com/products/product.aspx?pn=220-T2-1600-X1)

Another reason I think my last rig lasted so long is that I gave it a lot of headroom: no single component was being taxed to its limit. That Vega Frontier card can be an energy hog, drawing upwards of 350 watts, and so can the overclocked 18-core/36-thread chip. So I went with the biggest power supply I could find. It's fully modular, which I really like. Since I didn’t run any SATA drives or anything, I am only using the video card power cables and the 12V rail, and being modular meant I didn’t have as many cables to hide.
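To make the headroom argument a bit more concrete, here is a back-of-the-envelope power budget sketched in Python. Only the 350W GPU figure comes from this post; the 350W peak for the overclocked CPU (read from "and so can the ... chip") and the 150W for everything else are assumptions, not measurements.

```python
# Rough PSU headroom estimate. Only the GPU figure comes from the post;
# the CPU and "rest of system" draws are hypothetical placeholders.
PSU_CAPACITY_W = 1600  # EVGA SuperNOVA T2 1600W

loads_w = {
    "Vega Frontier Edition Liquid (peak)": 350,     # from the post
    "i9-7980XE overclocked (assumed peak)": 350,    # assumption
    "board, RAM, SSDs, fans, pump (assumed)": 150,  # hypothetical
}


def headroom(capacity_w: float, loads: dict[str, float]) -> tuple[float, float]:
    """Return (total estimated draw in watts, fraction of PSU capacity used)."""
    total = sum(loads.values())
    return total, total / capacity_w


total_w, load_fraction = headroom(PSU_CAPACITY_W, loads_w)
print(f"Estimated peak draw: {total_w} W ({load_fraction:.0%} of {PSU_CAPACITY_W} W)")
```

Under these assumed numbers the supply would sit around half load even at peak, which is the kind of margin described above.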

Thermaltake – Floe Riing RGB 360 TT Premium Edition 42.3 CFM Liquid CPU Cooler (http://www.thermaltake.com/products-model.aspx?id=C_00003122)

Going along with the “keep everything overly cool” theme, and with the Silicon Lottery QVL calling for a 360mm radiator, this one was my choice. It was easy to install, looks really cool, and I even have the RGBs giving me a visual indicator of the CPU temperature: when it’s cool the lights are blue, and as it gets hotter they move through green and yellow to red (DANGER)! My CPU idles at about 28-29 degrees Celsius, and considering the 7980XE is supposed to run hot, I’m thrilled with that. Under Prime95 stress testing it doesn’t get hotter than 69°C even after a prolonged run, and when bitcoin mining on all 18 cores with MinerGate it hovers around 55-60°C. I used Arctic MX-4 thermal paste per the QVL.

Cooler Master – COSMOS C700P ATX Full Tower Case (http://www.coolermaster.com/case/full-tower/cosmos-c700p/)

With the size of my components, I wanted a BIG case, and believe me when I say it: this thing is HUGE. All the room helps with cooling, a lot of the parts are large and would be cumbersome to fit into something smaller, and I have plenty of room on my desk for a large case. The reviews on the case are, shall we say, mixed… but I have found it to be an extraordinary case. It looks really sharp, has a tempered glass door to show off your LEDs, keeps the iconic COSMOS handles (although my final build weighs over 75 pounds, so I’m not taking it anywhere), and so far seems really well built.

Cougar – Dual-X 73.2 CFM 140mm Fan (https://www.amazon.com/Cougar-CFD14HBG-140mm-Cooling-Green/dp/B00C42TJ54)

As a personal rule, I always replace the stock fans with aftermarket ones that push more CFM, and I try to fill every slot I can with an aftermarket fan. My old PC was LOUD from all the fans; it sounded like a helicopter constantly taking off in my office. This one has more fans, and it is whisper quiet. Like, it’s almost weird how quiet it is. Occasionally you can hear the burble of the water block, because everything else makes literally zero noise. These fans have blue LEDs (per my motif) and hydraulic bearings, and I am quite pleased with them so far. I have my 360mm radiator blowing in, one of these on the bottom of the case pulling cold air in from the floor, one as an exhaust fan in the back, and 2 on the top as exhaust fans. The liquid-cooled video card’s radiator is also blowing in. So, by some crude calculations, the pressure in my case is roughly balanced.
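The "crude calculation" above can be sketched in Python by summing rated airflow for intake and exhaust. The 42.3 and 73.2 CFM ratings come from the part names listed in this post; treating 42.3 CFM as a per-fan figure, and leaving the GPU radiator fan as an unknown input (no rating is given for it), are assumptions.

```python
# Crude case-pressure check: rated (free-air) CFM of intake fans vs exhaust fans.
# Rated numbers ignore radiator/filter restriction, so this is only a rough sketch.
FLOE_RIING_FAN_CFM = 42.3  # assumption: the "42.3 CFM" rating is per radiator fan
COUGAR_DUAL_X_CFM = 73.2   # Cougar Dual-X 140mm rating


def net_airflow_cfm(gpu_radiator_cfm: float) -> float:
    """Return intake minus exhaust CFM; positive means positive case pressure.

    gpu_radiator_cfm is a hypothetical input: the post does not give a rating
    for the Vega card's 120mm radiator fan.
    """
    intake = (
        3 * FLOE_RIING_FAN_CFM     # 360mm CPU radiator blowing in
        + 1 * COUGAR_DUAL_X_CFM    # bottom fan pulling air from the floor
        + gpu_radiator_cfm         # GPU radiator blowing in
    )
    exhaust = (
        1 * COUGAR_DUAL_X_CFM      # rear exhaust
        + 2 * COUGAR_DUAL_X_CFM    # two top exhausts
    )
    return intake - exhaust


# GPU radiator airflow at which intake exactly matches exhaust.
break_even = -net_airflow_cfm(0.0)
print(f"Intake matches exhaust when the GPU radiator fan moves ~{break_even:.1f} CFM")
```

Under those assumptions, a GPU radiator fan moving roughly 20 CFM puts intake and exhaust about even, and anything more tips the case slightly positive. Real airflow through radiators and dust filters will be lower than the free-air ratings, so treat this as a sanity check rather than a measurement.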

Overclocking

Currently I am overclocked about 54% over the 2.6GHz base clock, running all 18 cores at 4.0GHz. The QVL and warranty allow me to go up to 4.3GHz, but I wanted a little headroom. And it is quite stable: lockups are few and far between, and I am currently blaming the relatively new video card drivers, which appear to be the culprit anyway.

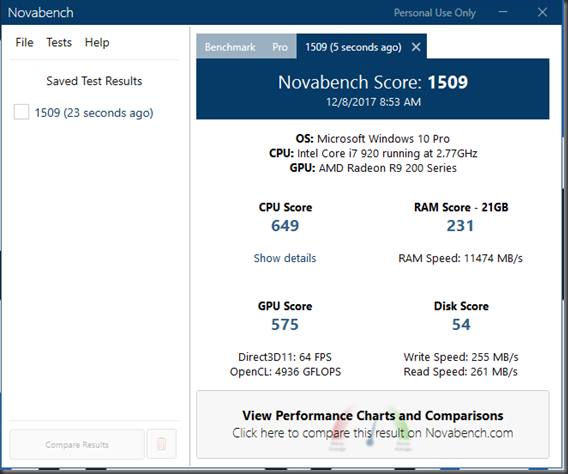

My old Novabench score:

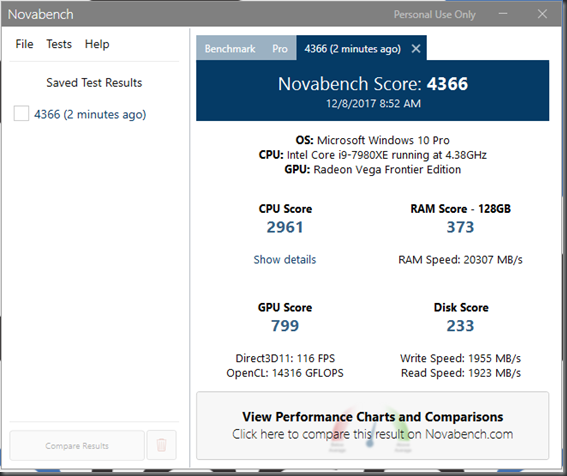

And my new Novabench score (the picture is from the 4.3GHz overclock my CPU is rated for; dropping to 4.0GHz barely affected the score):

The improvement is INSANE. Considering my old PC was still a pretty good computer, Aries blows it away, and I can tell in daily use. Visual Studio loads in the blink of an eye, and compiling our native UWP application went from 30 minutes to about 4.

Total cost of the build was ~$8500 (not counting the monitors or audio stuff noted below). Full part list here: (https://pcpartpicker.com/user/lkrammes/saved/#view=vPVm8d)

I currently work for a collaboration company that does a lot of work in the media space (http://collaborationsolutions.com/products/shared-media-player/), and we are scattered all over the world, so we spend a lot of time video chatting on Microsoft Teams. I wanted the freedom to talk without a headset, so I have a fairly complex audio-visual setup:

Creative Sound Blaster X7 (http://www.soundblaster.com/x7/) and sound setup

This is a full-featured DAC, not just a sound card; you could literally run your home theater setup with it. I have it wired to 2 Bose studio monitors and a 10-inch Polk Audio subwoofer (https://www.polkaudio.com/products/psw10). The sound quality is simply amazing. My audio engineer friend told me it was the best-sounding computer he had ever heard when we were tuning the EQ. To give me the freedom to walk around my office and talk, I have an Audio-Technica PRO 44 Cardioid Condenser Boundary Microphone mounted above my top row of monitors (http://www.audio-technica.com/cms/wired_mics/8ba9f72f1fc02bc5/index.html). It requires phantom power, and the X7 didn’t have enough juice to push it, so I had to add a phantom power supply (https://www.pyleaudio.com/sku/PS430/Compact-1-Channel-48V-Phantom-Power-Supply).

For the video, I went with the Logitech BRIO (https://www.logitech.com/en-us/product/brio) and man, this thing is sharp. The video quality is amazing. I get lots of comments on how clear I am in the video conference.

Das Keyboard 4 Professional (https://www.daskeyboard.com/daskeyboard-4-professional/) and Level 10 M Diamond Black Mouse (http://www.ttesports.com/Mice/39/LEVEL_10_M_Diamond_Black/productPage.htm?a=a&g=ftr#.WlV3w6inGUk)

I am a fan of the phrase “if you use something every day, buy the best one you can afford.” Just like a mechanic would want Snap-on or Mac Tools, I wanted a good keyboard and mouse, and these are simply my favorites. The keyboard has Cherry MX mechanical switches that I find a joy to type on, and having a volume control knob at my fingertips is one of my favorite features. The mouse is a joint design effort from Thermaltake and BMW. Those who know me know my obsession with BMW… (love this mouse).

ROG Rapture GT-AC5300 Router (https://www.asus.com/us/Networking/ROG-Rapture-GT-AC5300/)

This thing is a beast as well. It’s super fast and has a ton of range (I almost don’t need my access points in the house, of which I had 3 to get coverage everywhere). I currently have it connected to 2 ASUS AC68Us (https://www.asus.com/us/Networking/RTAC68U/) as APs, one on each floor, with the router in the basement, to get full coverage all over my house and yard. Recently, ASUS announced that they are going to let the AC68U be used as an AiMesh node in conjunction with the Rapture (https://www.asus.com/AiMesh/), which I will be experimenting with once the firmware gets out of beta. My neighbors tell me that my WiFi signal is stronger in their house than their own router’s…

So, that’s my new system. So far I couldn’t be happier. What do you think?

Pre cable cleanup:

Post cable cleanup:

Ready to rock!
